Casebrief: Avalanche Resque

- Figma file: Avalanche Resque

Avalanche Resque

Saving victims of avalanches using AR, increasing their chances of survival

Challenge

After 30 minutes buried under avalanche snow, a victim's chance of survival drops rapidly, and a burial that long often causes brain damage or other severe injuries. With the equipment rescue teams currently have, reaching a victim within those 30 minutes is extremely difficult.

The challenge was to create an Augmented Reality (AR) interface that helps the rescue team reach an avalanche victim faster, increasing the victim's chances of survival.

My part

Avalanche Resque is an individual project, so I was responsible for the entire workflow: from conceptualization to the high-fidelity design flow, the UX and UI, and the prototyping process, in which I built an AR prototype in Unity.

The technology

I researched which technologies I could use and how they work: whether they function in snowy conditions, how precisely they can show their data, and whether that data is useful enough to show the rescue team exactly where a victim is.

Ground-penetrating radar (GPR) turned out to be the most useful technology. It can show the exact location of a victim, and it penetrates deep enough into snow to quickly reveal where victims are buried and how many there are. Its output is also easy for us to read and to present to the rescue team.
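As background on how GPR turns a measurement into a depth: the radar times how long a pulse takes to travel down to a reflector (such as a buried body) and back, and radar waves travel more slowly in snow than in air. The sketch below applies the standard two-way travel-time relation, depth = c·t / (2·√εr). The relative permittivity εr of the snowpack is an assumed input (it varies with snow density and wetness), the 20 ns reading is hypothetical, and none of this is taken from the project's prototype.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum (m/s)


def gpr_depth_m(two_way_time_s: float, rel_permittivity: float) -> float:
    """Estimate reflector depth from a GPR two-way travel time.

    The pulse travels down and back, so the depth is half the total path.
    The wave speed in the medium is c / sqrt(relative permittivity).
    """
    if two_way_time_s < 0:
        raise ValueError("travel time must be non-negative")
    if rel_permittivity < 1:
        raise ValueError("relative permittivity must be >= 1")
    velocity = SPEED_OF_LIGHT_M_S / math.sqrt(rel_permittivity)
    return velocity * two_way_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical reading: an echo after 20 ns in snow with an assumed
    # relative permittivity of 1.8.
    print(f"{gpr_depth_m(20e-9, 1.8):.2f} m")
```

With the assumed permittivity of 1.8, a 20 ns echo corresponds to a depth of roughly 2.2 m; a wrong permittivity estimate scales every depth by the same factor, so the horizontal location stays useful even when the depth is approximate.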

How it works

To get this data quickly, the GPR has to be both fast and accurate in all kinds of snow conditions. I found that the best way to achieve this is to use large, heavy drones that can fly over the avalanche at high speed while staying steady enough to take measurements with the GPR mounted underneath.

This way, the data is collected quickly while the rescue team is still safely in the helicopter. Once they leave the helicopter, they can head straight to the victim using AR wayfinding patterns.
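To get a feel for how fast a drone survey can be, the sweep can be estimated with a back-and-forth (lawnmower) pattern: the debris field is split into parallel lanes one GPR swath wide, and the drones fly those lanes at a constant speed. This is a rough sketch under hypothetical assumptions (a rectangular field, lanes shared evenly between drones, turns ignored); the field size, swath width, speed and number of drones are illustrative inputs, not figures from this project.

```python
import math


def survey_time_s(field_width_m, field_length_m, swath_m, speed_m_s, n_drones=1):
    """Rough lawnmower-survey time in seconds, ignoring turns, take-off and landing.

    Lanes run along the field's length and each lane covers one swath width.
    Lanes are split as evenly as possible, so the busiest drone sets the time.
    """
    if min(field_width_m, field_length_m, swath_m, speed_m_s) <= 0 or n_drones < 1:
        raise ValueError("dimensions, swath, speed and drone count must be positive")
    lanes = math.ceil(field_width_m / swath_m)
    lanes_per_drone = math.ceil(lanes / n_drones)
    return lanes_per_drone * field_length_m / speed_m_s


if __name__ == "__main__":
    # Made-up example: 100 m x 200 m field, 4 m swath, 10 m/s, two drones.
    print(survey_time_s(100, 200, 4, 10, n_drones=2), "s")
```

With these made-up numbers the estimate is 260 s, a little over four minutes before turns are added. The point is only that swath width, flight speed and the number of drones are the levers that decide whether the survey fits comfortably inside the 30-minute window.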

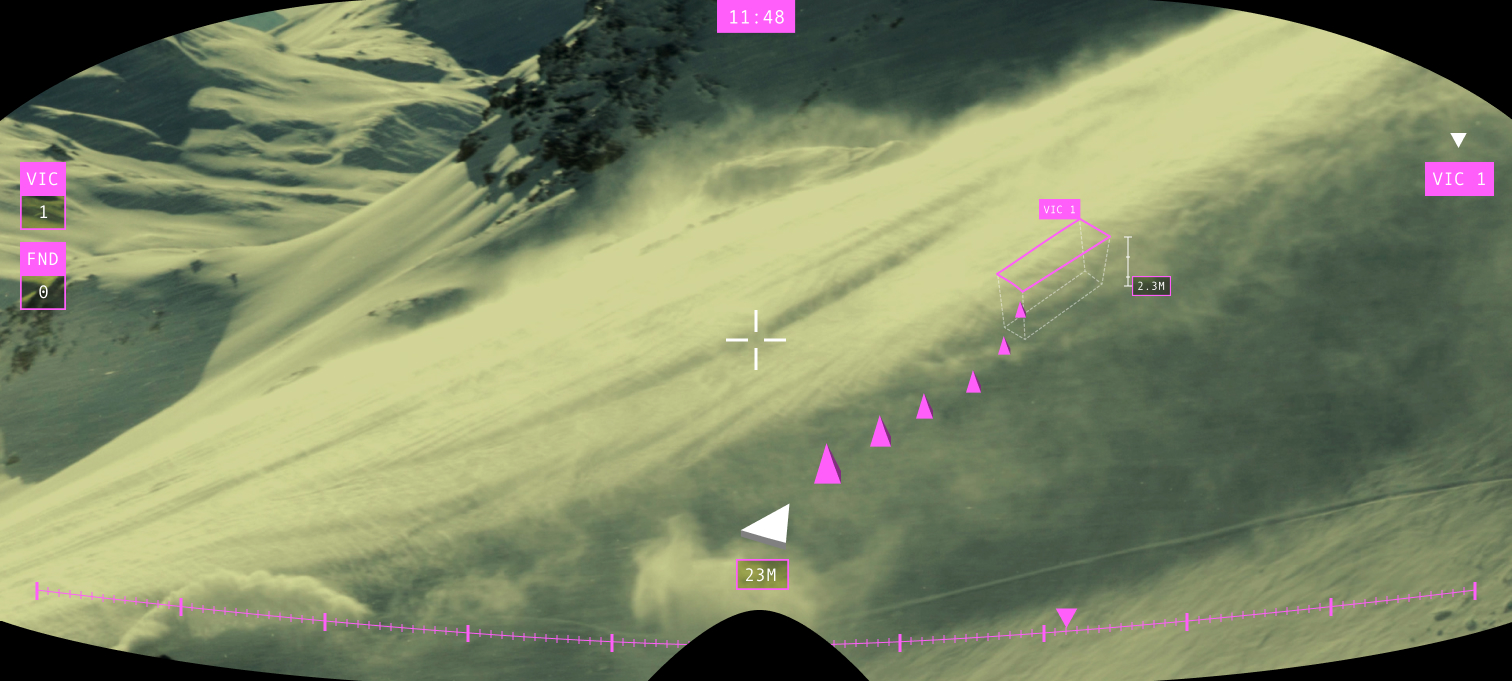

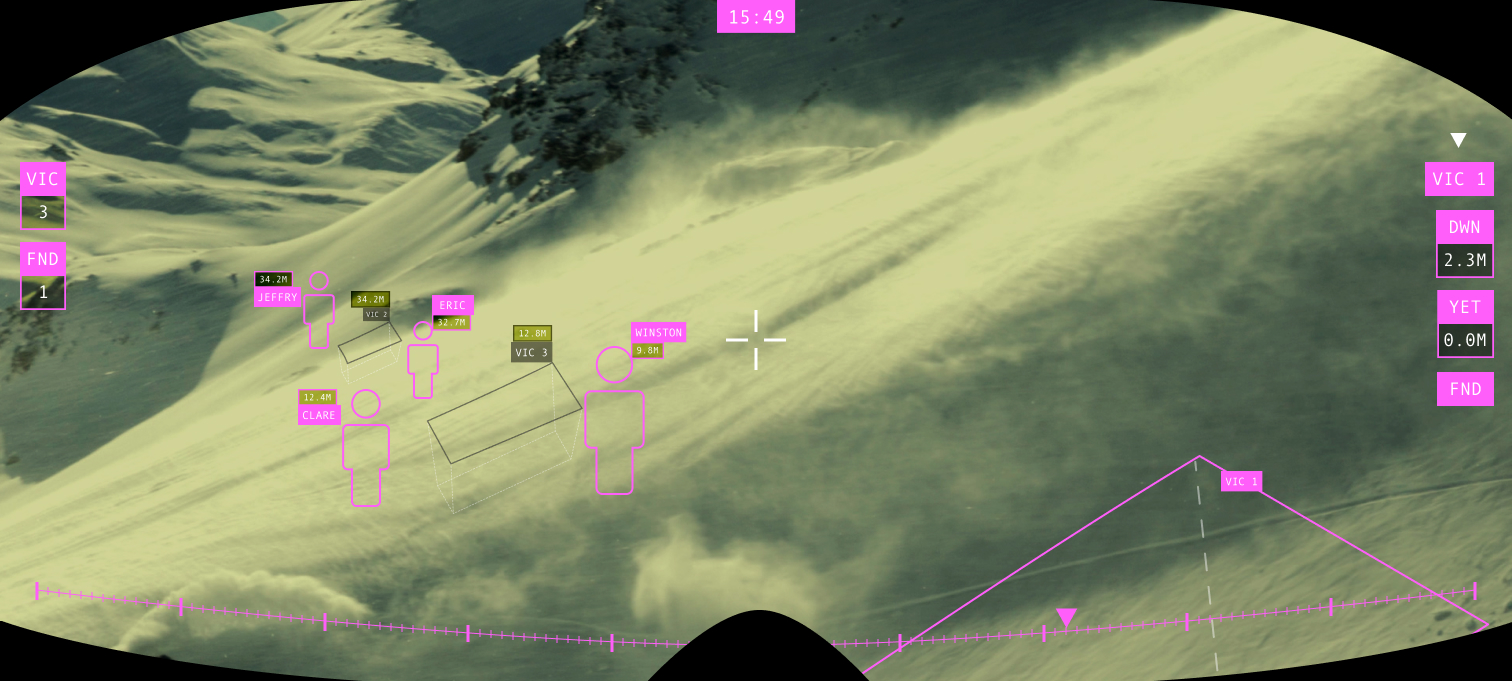

How the data is shown in AR and how to navigate to it

The data is shown in the interface inside the goggles. Using the MoSCoW method, I prioritized the data for each phase of the rescue, and I made a wireflow to map out where each phase begins. This let me create wireframes and decide which information the interface has to show at each moment.
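The prioritization step can be pictured as a small lookup: every piece of information gets a MoSCoW rating per rescue phase, and the goggles keep only the Must-haves of the current phase on screen, leaving the Should and Could items available as side information. The phase names and data items below are hypothetical placeholders; the real prioritization lives in the project's wireflow.

```python
from enum import IntEnum


class Priority(IntEnum):
    """MoSCoW ratings; a lower value means more important."""
    MUST = 1
    SHOULD = 2
    COULD = 3
    WONT = 4


# Hypothetical phases and data items, for illustration only.
PRIORITIES = {
    "in_helicopter": {
        "victim_count": Priority.MUST,
        "victim_locations_map": Priority.MUST,
        "weather": Priority.SHOULD,
        "route_arrow": Priority.WONT,
    },
    "approach": {
        "route_arrow": Priority.MUST,
        "distance_to_victim": Priority.MUST,
        "victim_count": Priority.SHOULD,
        "weather": Priority.COULD,
    },
    "at_victim": {
        "burial_depth": Priority.MUST,
        "elapsed_time": Priority.MUST,
        "distance_to_victim": Priority.COULD,
    },
}


def split_for_display(phase):
    """Return (on-screen items, side-information items) for a rescue phase.

    Must-haves stay on screen; Should/Could items become side information,
    ordered by priority; Won't-haves are hidden in this phase.
    """
    items = PRIORITIES[phase]
    on_screen = sorted(k for k, p in items.items() if p == Priority.MUST)
    side_info = sorted(
        (k for k, p in items.items() if p in (Priority.SHOULD, Priority.COULD)),
        key=lambda k: (items[k], k),
    )
    return on_screen, side_info
```

Keeping the ratings in one table per phase mirrors the wireflow: moving to the next phase only swaps which table is read, rather than redesigning the screen.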

Next, I considered how users can reach information that is not currently on screen. Thick gloves make it difficult to tap anything accurately, so instead of clickable buttons, users reach the side information through gestures, which are tracked by a sensor around the wrist inside the gloves.
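Gesture navigation can be modelled as a tiny state machine: the wrist sensor reports a recognised gesture, and each gesture moves between the main view and the side panels, so no precise pointing is needed. The gesture names, panel names and their mapping are hypothetical; the brief does not specify which gestures the sensor recognises.

```python
from dataclasses import dataclass

# Hypothetical panel order: the main view sits in the middle.
PANELS = ["victim_details", "main_view", "team_status"]


@dataclass
class GestureNavigator:
    """Moves between panels based on coarse wrist gestures."""

    index: int = PANELS.index("main_view")

    @property
    def current(self) -> str:
        return PANELS[self.index]

    def handle(self, gesture: str) -> str:
        """Apply one recognised gesture and return the panel now shown.

        Swipes step one panel left or right and stop at the ends; a fist
        returns to the main view. Unknown gestures are ignored, so a misread
        movement never jumps somewhere unexpected.
        """
        if gesture == "swipe_left":
            self.index = max(0, self.index - 1)
        elif gesture == "swipe_right":
            self.index = min(len(PANELS) - 1, self.index + 1)
        elif gesture == "fist":
            self.index = PANELS.index("main_view")
        return self.current
```

Using a few large, distinct movements and a single "home" gesture keeps the interaction forgiving for gloved hands, which is the same reasoning that ruled out clickable buttons.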

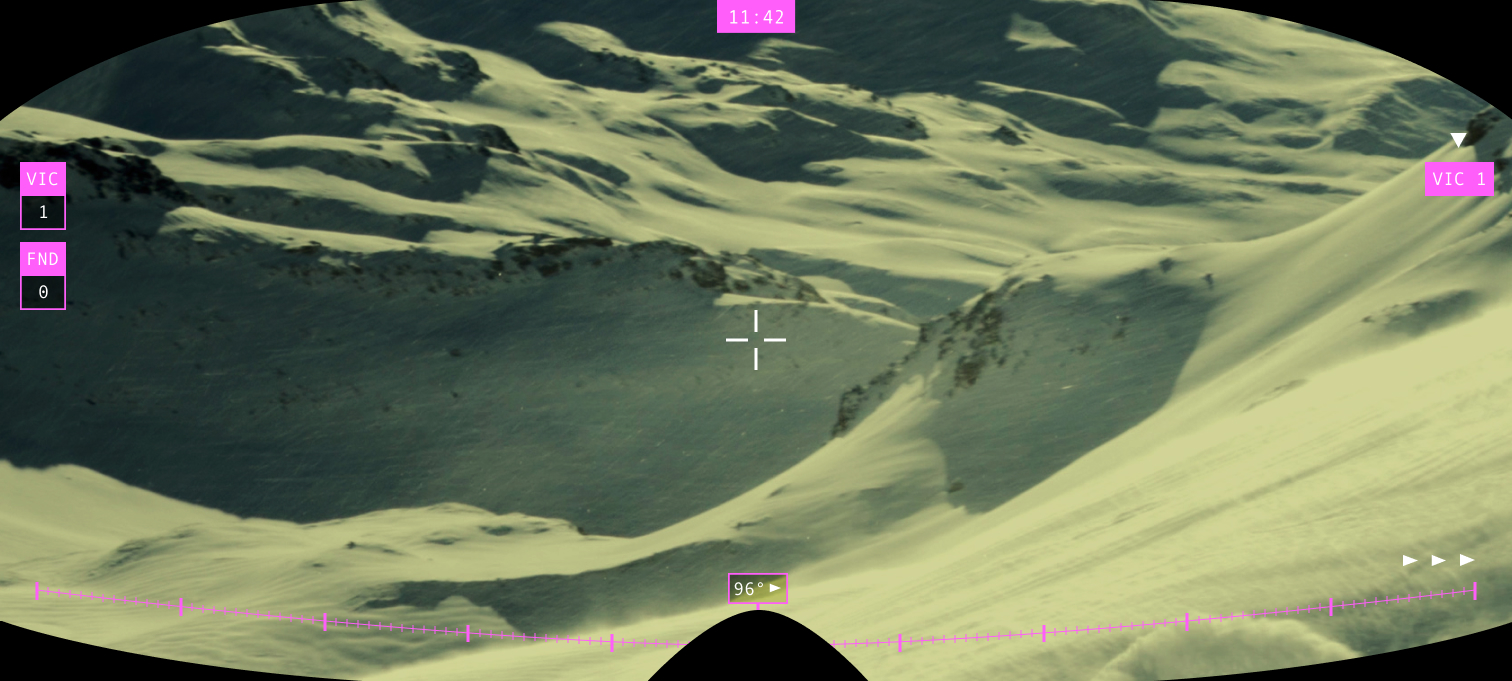

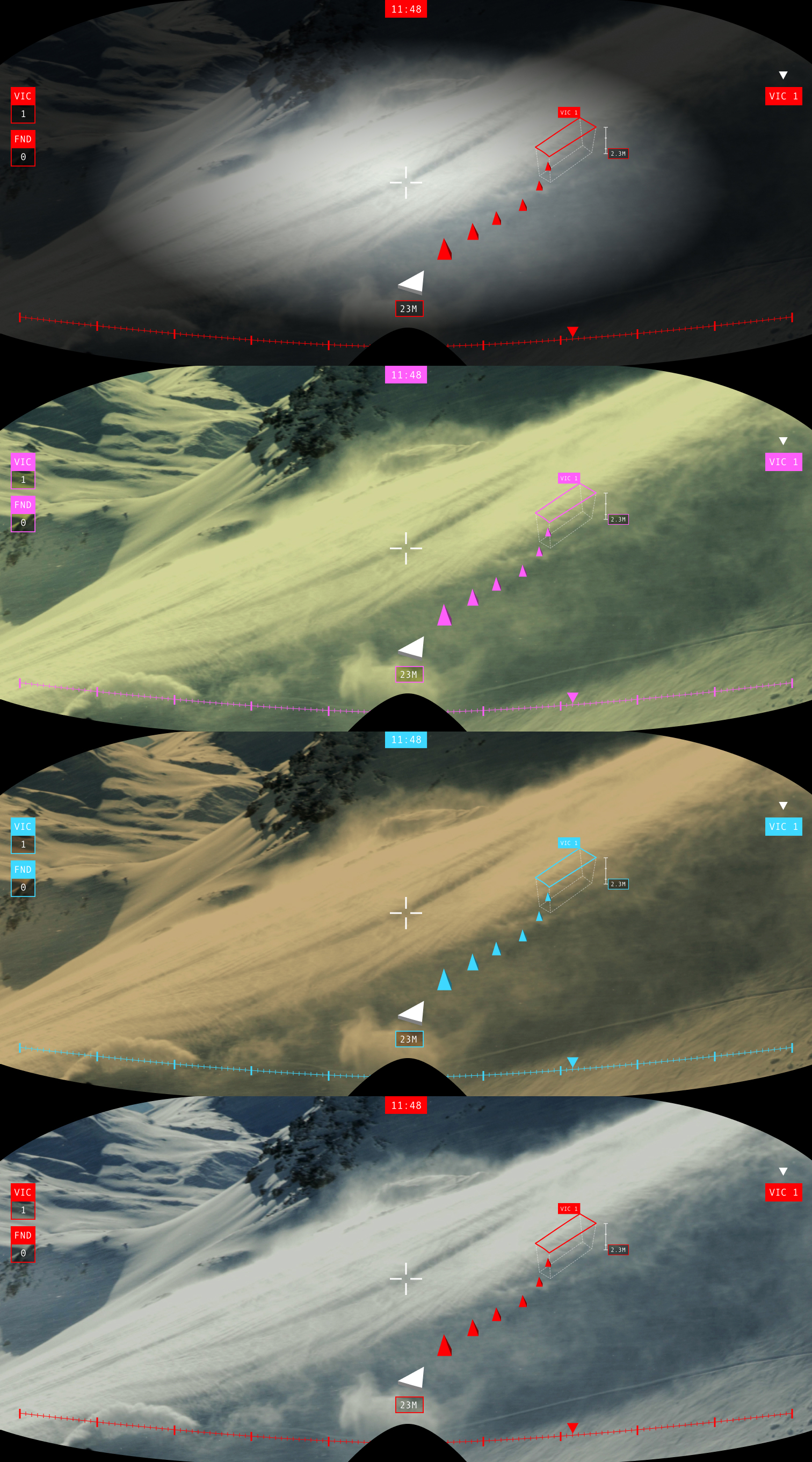

The lenses

In the mountains the weather is variable, and the rescue team needs to see clearly in all conditions. The lenses therefore need to be swappable and come in different colors. This lets the team see in every condition, but because each lens has a different tint, the UI has to change its colors depending on which lens is in use.

Color theory

I researched the different lenses that can be used and ended up with four weather conditions that each need their own kind of lens: a night lens (clear), a sun lens (darker tint), a snowstorm lens (yellow) and a cloud lens (orange).

I tested these four lenses in each of the weather conditions and, for each lens, picked the UI color with the most contrast against its tint by taking the opposite color on the color wheel.
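Picking the opposite color on the color wheel amounts to rotating the hue by 180° while keeping saturation and brightness. The sketch below does this with Python's standard `colorsys` module. It assumes an RGB-based (HSV) color wheel, where yellow sits opposite blue; on a traditional painter's (RYB) wheel the pairs differ, for example yellow sits opposite purple. The lens tint values are hypothetical approximations, not measured lens colors.

```python
import colorsys

# Hypothetical sRGB approximations of the tinted lenses (8-bit channels).
LENS_TINTS = {
    "snowstorm": (255, 255, 0),  # yellow
    "cloud": (255, 128, 0),      # orange
}


def complementary(rgb):
    """Return the color opposite `rgb` on the HSV color wheel.

    The hue is rotated by half a turn; saturation and value are kept.
    Neutral colors (no hue) map to themselves.
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    r2, g2, b2 = colorsys.hsv_to_rgb((h + 0.5) % 1.0, s, v)
    return tuple(round(c * 255) for c in (r2, g2, b2))


if __name__ == "__main__":
    for lens, tint in LENS_TINTS.items():
        print(lens, tint, "->", complementary(tint))
```

In this sketch a neutral tint, such as a clear or grey lens, has no hue to rotate and maps to itself, so for those lenses the contrast would have to come from brightness rather than hue.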

Wayfinding in AR

The rescue team navigates to the victim by following an AR wayfinding pattern in 3D space. This way they can reach the victim as fast as possible without losing track of their own location or the victims'.

As a result, the team can work more efficiently to reach a victim within the 30-minute window, reducing the chance that the victim is badly injured or killed.
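Underneath a wayfinding pattern, the goggles need to know which way and how far away the victim is. Assuming the drone survey geotags each detection with GPS coordinates and the goggles know the wearer's position and heading (all assumptions, not details from this brief), the distance and compass bearing can be computed with the haversine formula, and the AR arrow or path rotated by the difference between that bearing and the wearer's heading. This is a sketch of the geometry, not the logic of the Unity prototype.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Return (distance in metres, initial bearing in degrees from north).

    Uses the haversine formula on a spherical Earth, which is accurate
    enough over short distances such as an avalanche debris field.
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)

    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def arrow_rotation_deg(bearing_deg, heading_deg):
    """Signed turn from the wearer's heading to the target, in (-180, 180].

    Positive means turn right (clockwise), negative means turn left.
    """
    turn = (bearing_deg - heading_deg) % 360.0
    return turn - 360.0 if turn > 180.0 else turn
```

Returning a signed turn rather than a raw bearing lets the interface show the shortest way round, for example a small left turn instead of a 340° right turn.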
